6 Different Conversation Types For Better Generative AI Results and User Experience

Oh: There is no "optimal" or perfect prompt length. Go figure.

Morning y’all!

I spent much of my morning curating my Twitter feed, namely my content and interest settings, which are buried deep in their maze of options. If you want a visual on how to get there, I shared these images on Twitter.

¯\_(ツ)_/¯

Today’s post is a bit more academic in nature, but there are some practical applications here both for users and for folks (and businesses) looking to build generative artificial intelligence products. I like to stay on top of these things, especially as I experiment with a few smaller projects myself, and I like to capture this type of useful data in this newsletter’s archive.

Have a great day folks!

※\(^o^)/※

— Summer

The Nielsen Norman Group, a research organization focused on user experience, recently spent some time studying the different types of GenAI bots and how users engage with them. The summary of the study is as follows:

When interacting with generative-AI bots, users engage in six types of conversations, depending on their skill levels and their information needs. Interfaces for UI bots should support and accommodate this diversity of conversation styles.

I’ve pulled out a few of the more interesting tidbits and some useful visuals that you might want to save for future use and review.

A few key points:

Different conversation types serve distinct needs and demand varied UI designs.

There doesn’t appear to be an “optimal” conversation length.

The second point is important to note since I hear so much noise out there about the “perfect” prompt length, but the reality is that there simply isn’t one. Context is everything, and the outcomes you want will ultimately determine how much (or how little) time you spend manicuring the prompt.

Here are the six conversation types, each with a brief explanation. The first two are shorter chats with fewer query refinements, while the last four are longer in nature and involve many query refinements:

Search — Resembles a search-engine query with one or more keywords; it is not followed up by other queries.

Pinpointing — Contains one or a few very specific prompts with minute details about context and desired output format.

Funneling — Starts broadly with an underspecified query that is further narrowed down with subsequent prompts that specify additional constraints.

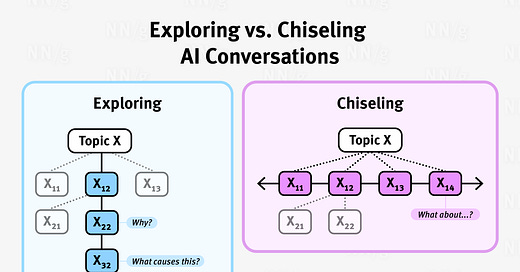

Exploring — Starts with a broad query and explores in depth a new knowledge domain; subsequent queries build on the concepts and facts from the AI’s response.

Chiseling — Investigates different facets of one or several related topics.

Expanding — Starts with a narrow topic that gets expanded, often because the results from the original prompt were unsatisfactory.

A high-level visual of these six might be useful:

I do appreciate some of the thoughts they have around building good products. Here are a few of their points that I found useful:

Consider allowing users to easily switch to a search-engine mode or access search-engine results, like Bard does.

To reduce the articulation load in funneling conversations, the bot should ask helping questions that narrow down underspecified queries. For example, you may add phrasing such as Ask me questions if you need additional information, to get the bot to help you articulate the different constraints that you may be working with.

Conversations with well-defined information needs (such as pinpointing conversations) rarely benefit from such followup prompts.

Chiseling conversations benefit from suggested followup queries. However, for chiseling conversations, suggested followup prompts should be broad, inquiring about multiple facets of the same topic or about related topics (e.g., How about…?).

Ask the user for specific details about their question, as well as about the format of the answer. Consider giving users examples of the information they could provide in a prompt if the prompt is too vague or underspecified.

When the bot is not able to provide an answer to the user’s query, provide suggested followup prompts that relax some of the criteria in the user’s original question and return an answer.

In general, the higher the number of attempts to do a task, the worse the usability.
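For the builders out there, the funneling tip above is easy to try. Here is a tiny Python sketch of it. To be clear, the helper name `with_clarifying_invite` and the 12-word cutoff are my own made-up assumptions, not anything from the NN/g study; only the appended phrase comes from their suggestion.

```python
# Hypothetical helper (my own naming): nudges the bot to ask narrowing
# questions when a prompt looks underspecified.
# The 12-word cutoff is an arbitrary guess, not a researched number.
CLARIFY_PHRASE = "Ask me questions if you need additional information."


def with_clarifying_invite(prompt: str, max_words: int = 12) -> str:
    """Append the clarifying phrase to short, likely underspecified prompts."""
    text = prompt.strip()
    if not text:
        return text  # nothing to work with
    if len(text.split()) > max_words or CLARIFY_PHRASE in text:
        return text  # long or already-inviting prompts are left alone
    # Add a period only if the prompt doesn't already end a sentence.
    sep = "" if text.endswith((".", "?", "!")) else "."
    return f"{text}{sep} {CLARIFY_PHRASE}"


print(with_clarifying_invite("Plan a trip to Japan"))
```

Prompts that are already long and detailed pass through untouched, which lines up with the note that pinpointing conversations rarely benefit from this kind of followup.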

The bottom line is that there are some real opportunities to improve the user experience when the product can quickly understand the user’s specific need and intent and then funnel them into a more specific conversational type.

As NNGroup suggests:

Bots should attempt to use the length and the structure of the user’s prompt, as well as the complexity of the answer to determine the conversation type early in the exchange and adjust behavior accordingly.

Consequently, adapting to the conversation type, instead of providing a generalized, one-size-fits-all approach, will make the results (and user happiness) better, which increases stickiness and a natural desire to trust the results and the bot on the whole.
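If you're wondering what "use the length and the structure of the user's prompt" might look like in practice, here is a very rough Python sketch. Everything in it is an assumption on my part: the function name, the word-count thresholds, and the list of format hint words are invented for illustration, and it only looks at the first prompt (it ignores answer complexity entirely). A real product would need actual data behind these numbers.

```python
# Toy heuristic, not NN/g's method: guess the conversation type from the
# first prompt's length and structure. All thresholds are made up.
FORMAT_HINTS = {"table", "list", "bullet", "bullets", "bulleted",
                "json", "format", "formatted", "paragraphs"}


def guess_conversation_type(first_prompt: str) -> str:
    """Return 'search', 'pinpointing', or 'multi-turn' for a first prompt."""
    words = first_prompt.split()
    n = len(words)
    # Does the prompt say anything about the desired output format?
    mentions_format = any(w.lower().strip(".,:;!?") in FORMAT_HINTS for w in words)

    # A handful of keywords with no question mark looks like a search query.
    if n <= 4 and not first_prompt.rstrip().endswith("?"):
        return "search"
    # Long, detailed prompts (or mid-length ones naming a format) look like pinpointing.
    if n >= 30 or (n >= 15 and mentions_format):
        return "pinpointing"
    # Anything else is probably the start of a longer conversation
    # (funneling, exploring, chiseling, or expanding), which a single
    # prompt can't tell apart.
    return "multi-turn"


print(guess_conversation_type("nyc pizza"))
print(guess_conversation_type("How do I get started with investing?"))
```

The point is less the specific rules and more the idea: once the bot has a guess, it can decide whether to offer search results, ask narrowing questions, or suggest broader followups.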

Who would have thought? Something to marinate on as users and builders in this new and powerful world.

Go on and prompt strong.

✌(-‿-)✌

— Summer