Training AI at Scale with Meta, Alexa's Fail, and Dream Machine by Luma Labs

A few news items and tidbits from the artificial intelligence space.

Morning y’all!

Thursdays are my favorite days, so we’re closing out the week strong. I hope you are too! I hope you’ve gotten most of what you wanted done and are feeling good about the coming weekend.

Take care and we’ll chat soon.

※\(^o^)/※

— Summer

Meta shares how it trains large language models at scale:

Meta is facing challenges in training large language models because of the sheer amount of computational power required. This has led the company to rethink its infrastructure around hardware reliability, failure recovery, efficient preservation of training state, and optimal GPU connectivity. Meta is innovating across the infrastructure stack: developing new training software and scheduling algorithms, modifying hardware such as the Grand Teton platform, optimizing data center deployments, and exploring new network fabrics.

Alexa hasn’t really been in the picture lately when it comes to AI, and for some this means Amazon has dropped the ball on an obvious opportunity. Issues with technical and organizational design have resulted in dysfunction around execution.

Apple won’t pay OpenAI in cash; instead, it will pay through distribution. Sidenote: OpenAI has doubled its annualized revenue to $3.4B. Yikes.

Dream Machine, by Luma Labs, is a new AI model that can generate 5-second video clips from text and image prompts. Some are saying it’s better than OpenAI’s Sora.

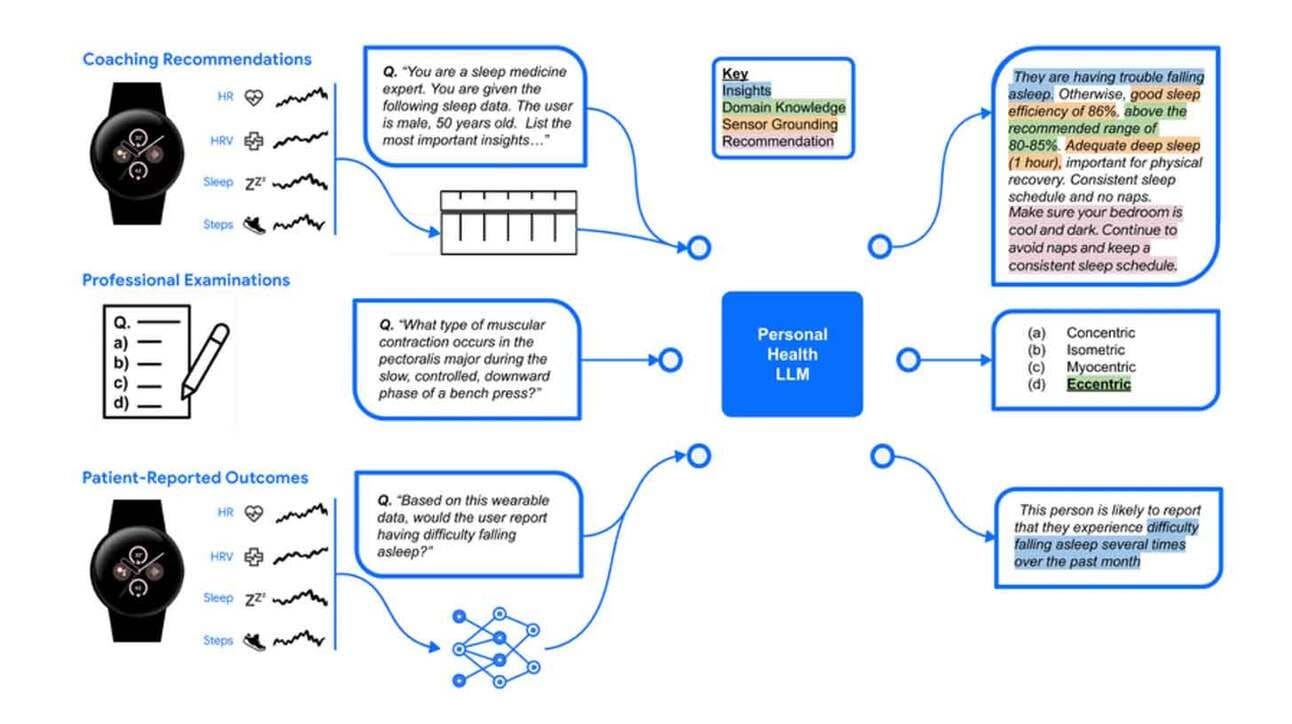

Google released two new research papers that explore AI agents working with health data and wearable devices.

Learn how to apply thoughtful design when building AI.

Dad can’t draw. Use AI to create coloring pages for your kids.

And finally, a small app from Google that makes letters out of anything.

And that’s a wrap! Have a great one, folks.

※\(^o^)/※

— Summer