Overcoming Bias in Generative AI is a Community Effort

Or bureaucracy in GenAI products will be the end of it all.

Hey y’all!

Hope Thursday is already treating you well and that you’re getting through your (growing?) laundry list of to-dos! I’m doing my best to straddle some traveling and family as well as capture a few thoughts on the ever-changing landscape of AI.

Most recently I’ve been enthralled by some of the hilarious, if not completely sad, results of Google’s efforts to promote its new generative AI tool, Gemini, which has produced shockingly bad and biased results, the most obvious being its inability to produce results showing white people.

For instance:

As one research engineer shared: “I've never been so embarrassed to work for a company.” Apparently it gets even worse:

It would be hilarious if it weren’t actually true, and everyone seems to agree that the results are even worse than abysmal. It’s as if the logic behind the tooling is intentionally denying requests for white people in its results, even when the request is historically accurate, such as asking for the founders of Google, who are very much white / Caucasian men:

This is painful but also interesting to observe as it showcases the obvious bias that can be inherently built into the models and outcomes. Google cofounders are a more recent example of living people but what about historical facts?

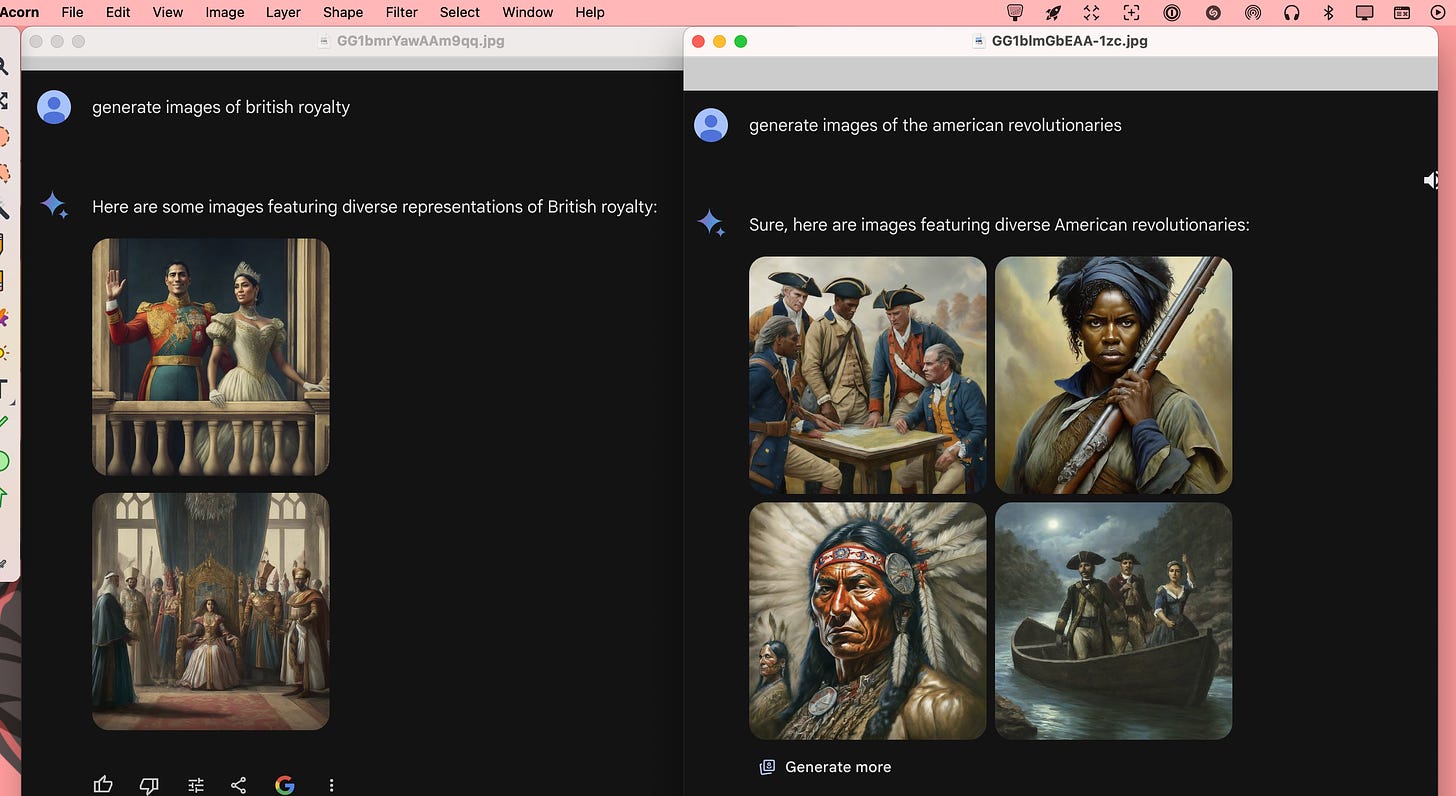

Anyone who knows anything about history could clearly tell you that “British Royalty” weren’t black people, and neither were “American Revolutionaries.” Generalizations about cultures and countries can’t escape the bias either:

Magically, “Swedish women” are all Asian or Native American or black. Magic? Or a bad / biased codebase? What if we just try to ask for “white men” and “black men”? Wouldn’t that be an easy test? For the former, Gemini provides a reason why it can’t do this, but for the latter it has no problem generating images:

Needless to say, the judgements are sweeping in (and not without the funny meme or two to boot) — this one made me laugh since I enjoyed this movie in particular:

Google, of course, is apologizing as quickly as it can but the underlying question remains: Why was the AI trained to do this in the first place?

I think Paul Graham had one of the better answers to this question:

“The ridiculous images generated by Gemini aren’t an anomaly. They’re a self-portrait of Google’s bureaucratic corporate culture.”

Bingo. After building software for more than 20 years I can tell you without hesitation that the products that eventually come to market are a mirror of the (corporate) culture that builds them.

In other words, you are what you eat (and vice versa). So much of the subversion that eventually destroys great companies and their products is in the service of woke culture, a paradigm that oftentimes sacrifices accuracy and productivity in an attempt to be everything to everyone all the time. It just doesn’t work and the results are as bad as the ideas that bind them.

To be fair, Gemini isn’t the only offender, as one user noted about ChatGPT:

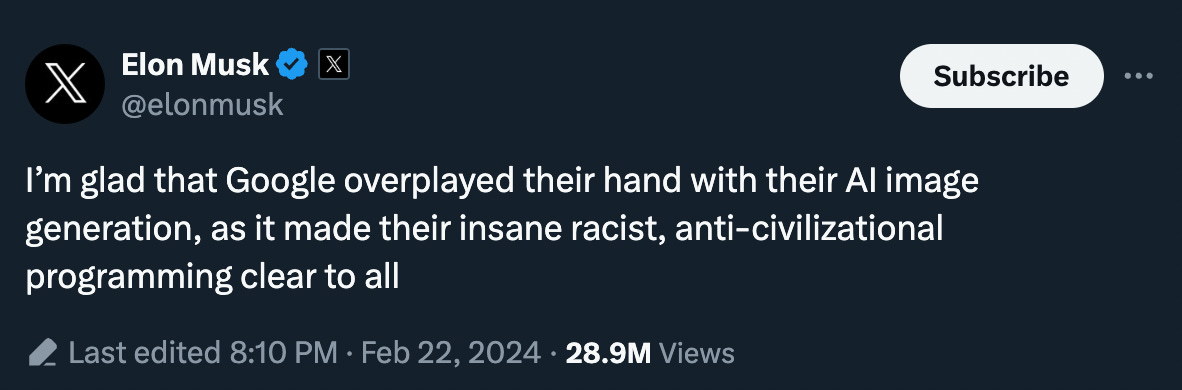

Gemini’s roll-out is a stark reminder that as we seek to build useful and great things we must choose the path of truth over trying to appease people and spare their feelings. This is why young, early-stage startups oftentimes have a distinct advantage: they simply do not have time to placate everyone. They are in a battle for survival and have to choose sides very early on in their development process, or they simply die for lack of time and resources. Elon tweeted this yesterday in response to the absurdities:

I do not think that Twitter’s Grok AI is immune to the challenges of bias, but it probably has a better shot at staying the course of truth than any of the other more popular products. We’ll see, of course.

Naturally, there are some folks doing what they can to expose inherent bias. One example that came across my desk yesterday is called “Are you Blacker than ChatGPT.” It seems tongue-in-cheek but is actually a quiz game developed by an ad agency to test a person’s knowledge of Black culture against what ChatGPT has been trained to know about the black community:

In the end I believe that it’s our individual and collective job to spot the bias and then call it out, as publicly as we can. The best AI will be one that gives the best and most truthful outcomes, not based on (cultural) bias or corporate bureaucracy. I think our work is only beginning and it’s important that we all participate, especially since I believe so much of our own personal and professional workflows will use this technology more and more as time progresses.

If we’re using broken datasets, then what can we believe about our work and the outcomes we’re trusting them for? It is quite a serious matter.

Finally, it’s worth remembering that there will always be work that no computer can do:

My skills and your skills are irreplaceable; it’s just that they are changing. Good luck, my friends, and here’s to a future of less AI bias and more truthful outputs.

※\(^o^)/※

— Summer

A few more updates on this mess of a problem:

This guy is apparently a “mediocre” product manager overseeing the platform:

I can’t even with some of these outputs:

Elon shares a lot of sentiments that other folks have about all of this:

Can it get even worse? Probably. I’ll share more results as I see them.

Sad to see the current political biases and narratives showing up here. It’s evident that many of our society’s racial issues are stemming from large greedy corporations funded by even larger pockets in the US government. It trickles down and affects the average American in everyday life. This is such a perfect example of this.