8 Generative AI Music Tools, Research, and Interviews You Should Listen To (and Try)

Are artificial intelligence and music the next tech revolution?

Morning y’all!

After yesterday’s more academic-ish post on the 6 different conversation types (useful for folks building GenAI products), I wanted to share something a bit more fun that I’ve been tracking for some time.

🎵 — Music! Boop, beep, boop, beep!

And no, I’m not a musician but I can’t seem to work effectively without some good tunes flowing through my head (and I sleep with a playlist in the background). The following are a handful of music-centric products, services, and research that I’ve been collecting over the past few months that you might find useful. Enjoy!

※\(^o^)/※

— Summer

Google MusicFX is an experimental technology that allows you to generate your own music. Powered by Google's MusicLM, it uses DeepMind’s “novel watermarking technology,” SynthID, to embed a digital watermark in the outputs.

Wondercraft has a handful of powerful audio-centric tools. They aren’t necessarily directly related to music, except for the ability to create (guided) music-based meditations and audiobooks. Their platform was rich enough to deserve a mention, so I’m sharing it with y’all. Take a look:

Suno is one of the first generative AI tools that I encountered and the results were immediately impressive. With a simple interface you can start putting together completely original songs without much effort.

I created these two songs in less than a minute with this simple prompt:

a lofi song for listening to while writing that includes light lyrics about creating content for an awesome community.

I won’t lie: I was stunned at how simple this was to do.

Here’s an example of someone who created a longer-form piece of music with visuals:

So, so good.

Next up is some research (and open source code) on how to best identify singing voices and extract that data for use. Here’s the abstract (and the .pdf below).

Significant strides have been made in creating voice identity representations using speech data. However, the same level of progress has not been achieved for singing voices. To bridge this gap, we suggest a framework for training singer identity encoders to extract representations suitable for various singing-related tasks, such as singing voice similarity and synthesis.

Their dataset is a large private corpus of professionally recorded singing voice data containing approximately 25,000 tracks, totaling 940 hours of audio. It consists of isolated vocals of re-recordings of popular songs by 5,700 artists and includes a variety of singing styles, voice types, lyrics, and audio effects.

Cool!

The above is an interview with Ben Camp, Associate Professor of Songwriting at Berklee College of Music, in which he shares his candid thoughts on the future of music and generative AI. Worth a listen if this is something you’re fascinated by!

He’s calling it the “next tech revolution” and I think there’s some real truth to that.

Micah Berkley discovered this neat music analysis tool, shown above, via @sake_min. It visually maps out the structure of any song and breaks it down into the Intro, Verse, Chorus, Bridge, and Outro.

An API is coming out at some point as well. I can imagine this being exceedingly useful for those who are thinking about not just writing music but also building products to help others do the same.

Musicfy allows you to create music with just your voice! Yup, that’s it. That’s the entire premise and product. It’s got a great domain too. Here’s an overview:

Sounds like fun (pun intended).

Have fun with these and let me know in the comments if there are any other tools you’ve found that I should check out!

Have a great Wednesday!

✌(-‿-)✌

— Summer

I’ll continue to add more resources related to music as well! Here are 2 more that I found just the other day:

More research related to music generation fine-tuned from human feedback:

We propose MusicRL, the first music generation system finetuned from human feedback. Appreciation of text-to-music models is particularly subjective since the concept of musicality as well as the specific intention behind a caption are user-dependent (e.g. a caption such as "upbeat work-out music" can map to a retro guitar solo or a techno pop beat).

You can read the paper here.

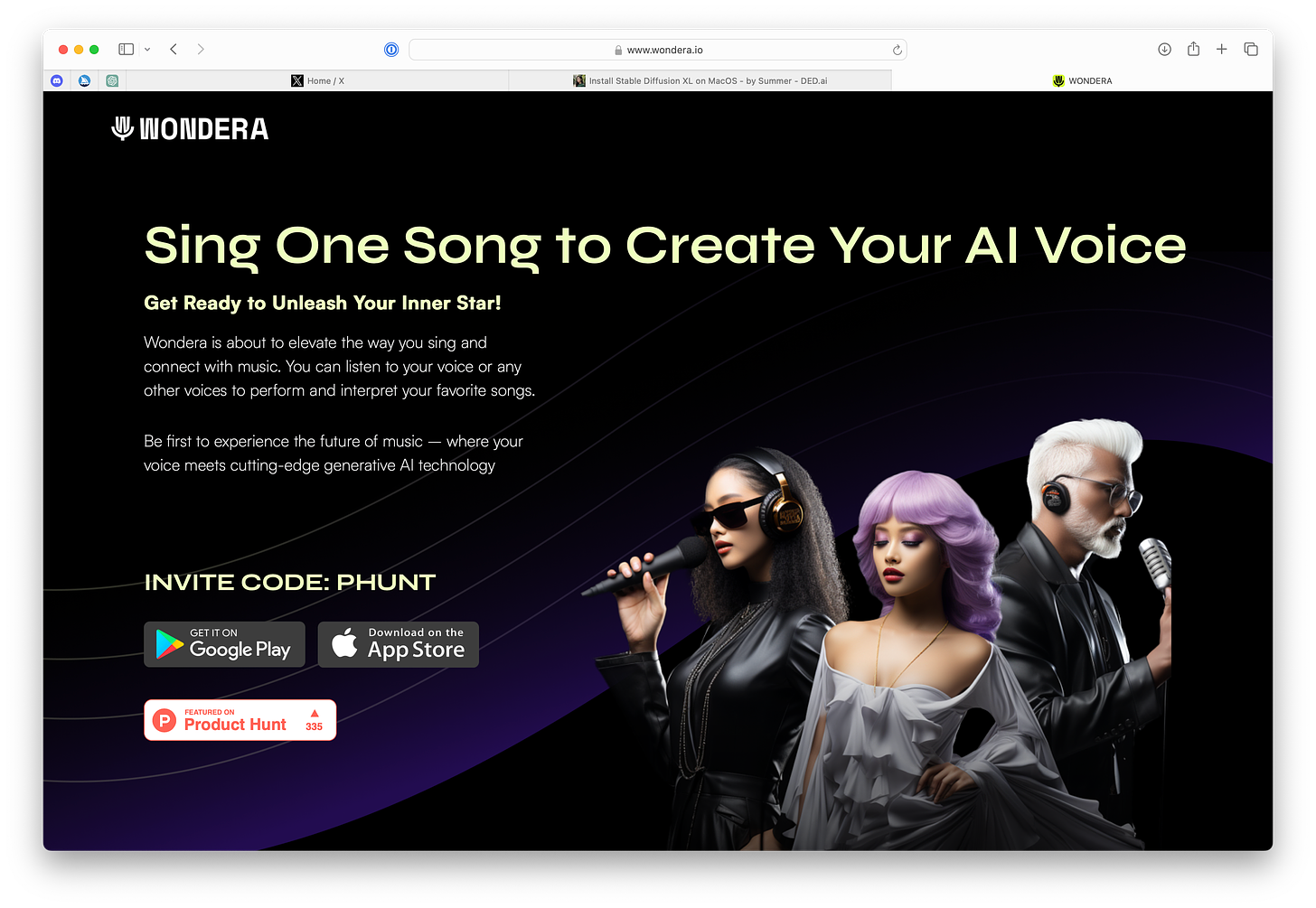

Wondera is all about music and karaoke so you can sing any song with your generative artificial intelligence voice. Sounds like fun (pun intended).

Stable Audio has research, demos, and code for those interested in generating long-form 44.1kHz stereo audio, including sound effects, from text prompts.